WormGPT Hacking AI Breach Raises Questions on Criminal AI


Hoplon InfoSec

10 Feb, 2026

Did someone really break into a criminal AI tool? Why should anyone care?

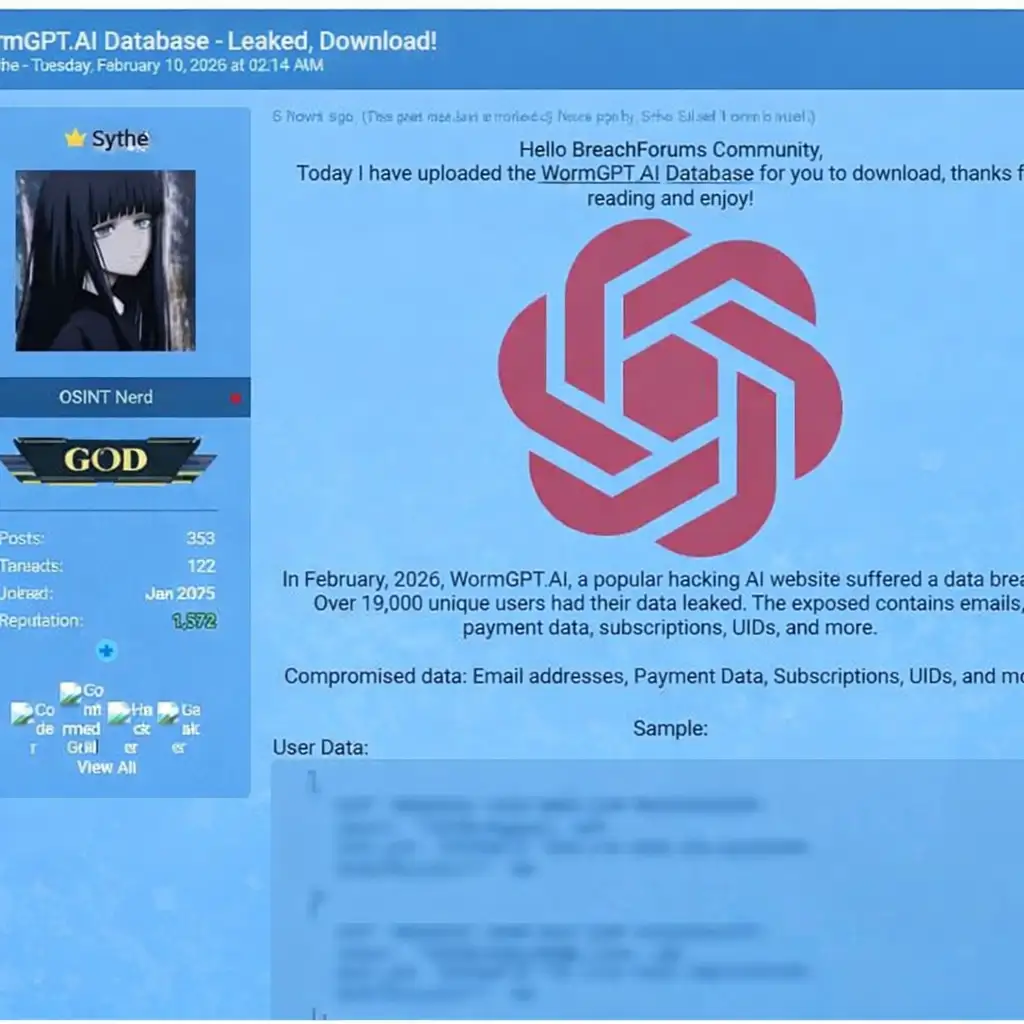

In early February 2026, a hacker claimed to have gained access to internal data related to WormGPT, an AI tool known for helping criminals. The WormGPT hacking AI breach, now widely discussed, has raised new questions about how safe underground AI platforms really are and what happens when tools made for crime turn on their own users.

The person who made the claim said they had accessed a database linked to WormGPT and then tried to sell the data on underground forums. No independent security company has confirmed that the leak is real, but the claim has sparked a lot of discussion in the cybersecurity community.

This matters right now because WormGPT is part of a growing trend. AI is no longer just helping businesses get things done faster. Criminals are also adapting, modifying, and abusing it. When a platform like this is hacked, it exposes not only technical flaws but also how fragile trust is in cybercrime ecosystems.

What is WormGPT, and why is it getting so much attention?

WormGPT is widely described as an AI chatbot built for cybercrime. Unlike most AI tools, which come with safety filters and usage rules, WormGPT was sold on underground forums as an unrestricted system. The people who sold it said it could produce phishing emails, scam scripts, and malicious code without any ethical limits.

WormGPT has been called a "shortcut" since it first showed up on criminal forums in 2023. Users didn't need to know a lot about technology. All they had to do was tell the AI what they wanted, and it would help them write convincing content. Less experienced attackers liked the platform because it was easy to use.

Defenders were never worried only about the software itself. The concern was scale. WormGPT and similar tools put advanced social engineering within reach of more people. That is why the WormGPT hacking AI breach has drawn so much attention from security experts.

(WormGPT Database Leak Claim)

What exactly is being said about the WormGPT hacking AI breach?

The current claim is based on a database that is said to be linked to WormGPT's internal systems. Screenshots shared on underground forums purportedly show access to user data and backend data. Some posts hinted that chat logs or account information might be included.

At this point, there is no official confirmation. Cybersecurity News makes it clear that the breach has not been independently confirmed. This distinction is important. Claims made on underground forums are sometimes exaggerated or used to sell fake data.

But the fact that there could be a breach brings up a known problem. Criminal platforms don't often put money into good security measures. They often move quickly, use the same infrastructure again, and put profit ahead of safety. In that light, it's not hard to picture a WormGPT hacking AI breach.

Why would someone want to attack a criminal AI platform?

It may seem ironic to hack a criminal service, but it happens all the time. These platforms have useful information. In underground markets, user accounts, prompts, and payment information are all worth something.

Reputation is also a factor. Compromising a well-known service can raise a threat actor's standing. In some cases, attackers use stolen data to blackmail users or operators.

In underground ecosystems, trust is everything. When that trust is broken, platforms often fail, change their names, or go away completely. That's why the WormGPT hacking AI breach could affect more than one tool.

How criminal AI platforms are built and where their security fails

Most underground AI tools are not built from scratch. They are often based on open-source language models that have been modified to remove safety features. These models are then wrapped in simple web dashboards or bots.

The infrastructure that supports them looks familiar: cloud hosting, servers, databases, and APIs. What sets them apart from legitimate services is a lack of discipline. Logging, encryption, and access controls are often weak.

Common problems include publicly exposed databases, reused passwords, and insufficient monitoring. If any of these problems were present in WormGPT's environment, a breach wouldn't be surprising. The WormGPT hacking AI breach resembles other breaches that have happened in underground services.
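The three problems named above also apply to legitimate services, and they can be framed as a simple self-audit. The following is a minimal Python sketch, assuming a hypothetical configuration dictionary with the keys `db_bind_address`, `credentials`, and `monitoring_enabled`. None of these names come from WormGPT or from any real product.

```python
from collections import Counter
from ipaddress import ip_address


def audit_service_config(config):
    """Return a list of findings for a hypothetical service configuration."""
    findings = []

    # 1. Publicly exposed database: bound to all interfaces or a public address.
    addr = ip_address(config.get("db_bind_address", "127.0.0.1"))
    if addr.is_unspecified or not (addr.is_private or addr.is_loopback):
        findings.append("database is reachable from public networks")

    # 2. Reused passwords: the same secret appears on more than one account.
    secret_counts = Counter(config.get("credentials", {}).values())
    if any(count > 1 for count in secret_counts.values()):
        findings.append("a password is reused across accounts")

    # 3. Insufficient monitoring: treated here as a simple on/off flag.
    if not config.get("monitoring_enabled", False):
        findings.append("monitoring is disabled")

    return findings


if __name__ == "__main__":
    risky = {
        "db_bind_address": "0.0.0.0",
        "credentials": {"admin": "hunter2", "backup": "hunter2"},
        "monitoring_enabled": False,
    }
    for finding in audit_service_config(risky):
        print(finding)
```

A real audit would inspect firewall rules, secret stores, and log pipelines rather than a single dictionary, but the sketch shows how basic these failure modes are.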

If the claims are true, what kind of data could be made public?

If the breach is real, the leaked data could include basic account information such as usernames or subscription details. Another possibility is prompt history. That information could show how the tool was used and what kinds of attacks or scams were being planned.

Payment information may also be included, although many underground sites use cryptocurrency. Even then, wallet addresses can be sensitive because they may be connected to other transactions.

It's important to be careful. These details are still just guesses until they can be verified by someone else. Cybersecurity News has stressed that it has not been confirmed that the WormGPT hacking AI breach is real.


A simple way to understand the impact

Think of a service that quietly helps criminals produce large numbers of fake documents. Now think about what would happen if someone broke into that service and stole all of the customer records. Its customers would quickly start to panic.

That's pretty much what a breach like this means. Users stop trusting the platform. Operators rush to respond. Competitors take advantage. Even if the service survives, its reputation may not.

This is why breaches inside criminal ecosystems often have outsized effects.

How this fits into the bigger picture of AI cybercrime

Generative AI is already changing how scams are written. Phishing emails look more polished. Messages are better localized. Scams no longer give themselves away with mistakes.

WormGPT became a symbol of this change because it openly advertised that it had no limits. The WormGPT hacking AI breach highlights a point that is rarely discussed: AI also adds complexity and risk for the criminals who use it.

The more data you have, the more exposure you have. More infrastructure means more places for attacks to happen. AI doesn't stop operational errors.

What this means for people and businesses in their daily lives

Most people never used WormGPT, but they may still feel its effects. Scammers use tools like this to make scams aimed at employees, customers, and businesses more believable.

If one platform goes down, it might slow things down for a while, but history shows that replacements come up quickly. There is still a lot of demand for automation.

This event reinforces advice that organizations already have. Teach users how to spot phishing. Use multiple layers of protection. Follow trusted sources of threat intelligence. The WormGPT hacking AI breach doesn't change the basics, but it is a reminder of why they matter.

What security teams should really pay attention to

Don't panic. Security teams should keep awareness and detection at the top of their list. AI-generated phishing still depends on a person acting on the message.

Email filtering, endpoint protection, and incident response planning still work. Learning about trends in criminal AI can help with preparation, but it can't predict what will happen next.
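Because AI-written messages may no longer contain telltale errors, one signal a mail filter might still check is structural: whether replies are routed to a different domain than the apparent sender. The sketch below is illustrative only. The function name `reply_to_mismatch` is hypothetical, it uses Python's standard `email` package, and a real filter would combine many signals rather than rely on this one.

```python
from email import message_from_string
from email.utils import parseaddr


def reply_to_mismatch(raw_message):
    """Return True if Reply-To points to a different domain than From."""
    msg = message_from_string(raw_message)
    _, from_addr = parseaddr(msg.get("From", ""))
    _, reply_addr = parseaddr(msg.get("Reply-To", ""))

    # No Reply-To header means replies go back to the sender: nothing to flag.
    if not reply_addr:
        return False

    # Compare only the domain part, case-insensitively.
    from_domain = from_addr.rpartition("@")[2].lower()
    reply_domain = reply_addr.rpartition("@")[2].lower()
    return from_domain != reply_domain


if __name__ == "__main__":
    sample = (
        "From: Payroll <payroll@example.com>\n"
        "Reply-To: payroll@example.net\n"
        "Subject: Updated bank details\n"
        "\n"
        "Please confirm your account information.\n"
    )
    print(reply_to_mismatch(sample))
```

A mismatch is not proof of phishing, since mailing lists and support desks legitimately use different reply addresses, so a check like this belongs in a scoring layer rather than a hard block.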

The WormGPT hacking AI breach should be seen as another sign of a long-term trend, not a sudden change.

Questions People Are Asking

Is it true that the WormGPT database has leaked?

It is unconfirmed. There is no independent confirmation of the leak as of February 2026.

What is WormGPT used for most of the time?

It has been advertised as a way to make phishing content and scam scripts without any limits.

Will this slow down AI cybercrime?

Maybe for one platform in the short term, but other tools like it are likely to come out.

Are real AI companies linked to WormGPT?

No. It is thought to use modified open-source models, with no official link to the companies or projects behind them.

Should companies do something new now?

No new action is needed beyond maintaining strong phishing defenses and user training.

What happens next

If the breach turns out to be real, WormGPT could close down, change its name, or just go away quietly. In the past, that has happened a lot.

AI will continue to be used in cybercrime. Some new tools will be better secured, while others will repeat the same mistakes.

The lesson is not about WormGPT itself. It's about how fast technology spreads and how often people neglect basic security.

Final thought

The WormGPT hacking AI breach shows us that even platforms made for criminals can make the same mistakes as any other online service that is rushed. Even though the claims haven't been proven, the conversation about them makes it easy to see the risks, limits, and contradictions of criminal AI. The best thing to do is to stay informed, be careful, and stay grounded.
